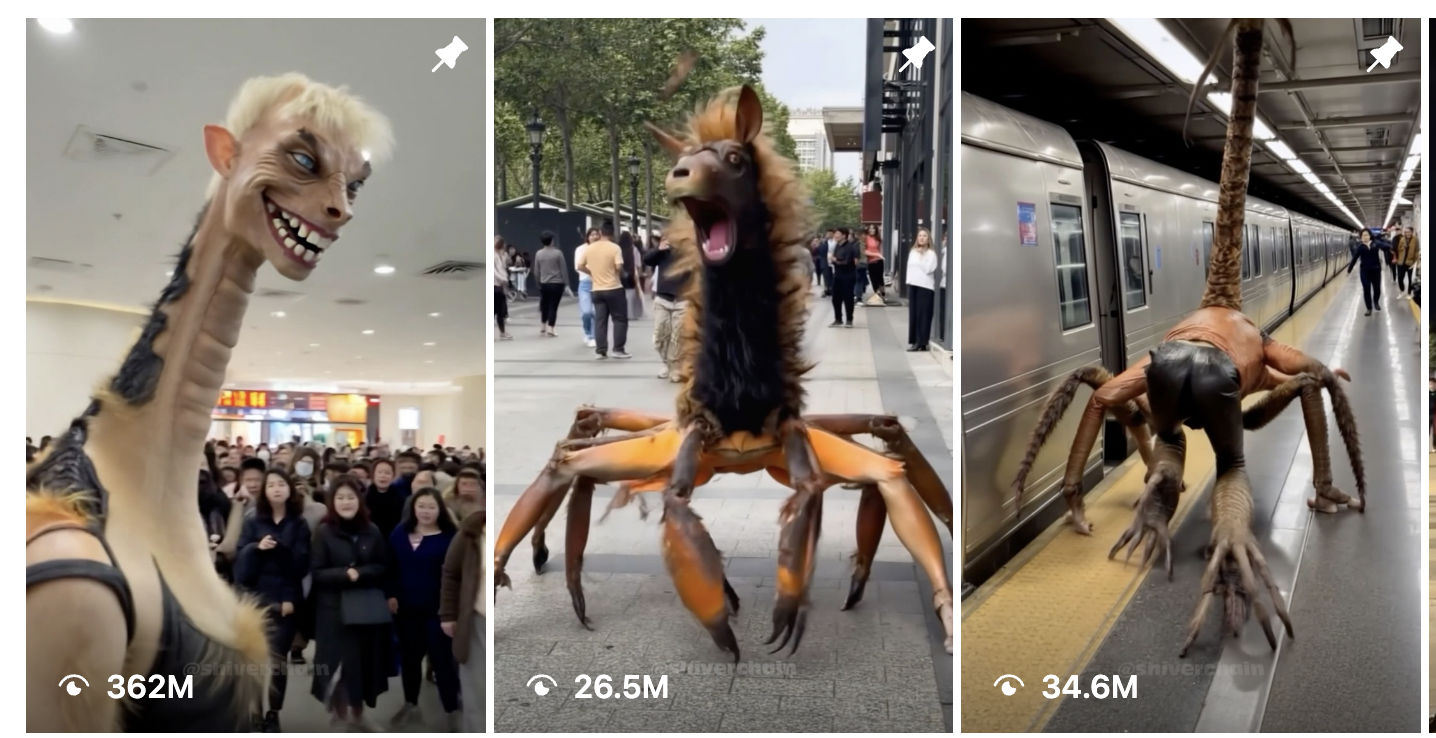

Consider, for a moment, that this AI-generated video of a bizarre creature turning into a spider, turning into a nightmare giraffe inside of a busy mall has been viewed 362 million times. That means this short reel has been viewed tens of times more than every single article 404 Media has ever published, combined.


This is what my Instagram Reels algorithm looks like now:


Any of these Reels could have been and probably was made in a matter of seconds or minutes. Many of the accounts that post them post multiple times per day. There are thousands of these types of accounts posting thousands of these types of Reels and images across every social media platform. Large parts of the SEO industry have pivoted entirely to AI-generated content, as has some of the internet advertising industry. They are using generative AI to brute force the internet, and it is working.

One of the first types of cyberattacks anyone learns about is the brute force attack. This is a type of hack that relies on rapid trial-and-error to guess a password. If a hacker is trying to guess a four-number PIN, they (or more likely an automated hacking tool) will guess 0000, then 0001, then 0002, and so on until the combination is guessed correctly.

As you may be able to tell from the name, brute force attacks are not very efficient, but they are effective. An attacker relentlessly hammers the target until a vulnerability is found or a password is guessed, and is then free to exploit the target.
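To make the idea concrete, here is a minimal Python sketch of the four-digit PIN attack described above. The `check` function stands in for whatever system accepts or rejects a guess; it and the secret PIN are hypothetical. The point is the shape of the attack: no cleverness, just trying every combination in order until one works, which for four digits means at most 10,000 guesses.

```python
from itertools import product
from typing import Callable, Optional


def brute_force_pin(check: Callable[[str], bool], length: int = 4) -> tuple[Optional[str], int]:
    """Guess every PIN of `length` digits in order (0000, 0001, ...).

    Returns the first guess that `check` accepts and the number of attempts,
    or (None, attempts) if no combination works.
    """
    attempts = 0
    # product() yields digit tuples in lexicographic order: 0000, 0001, 0002, ...
    for digits in product("0123456789", repeat=length):
        guess = "".join(digits)
        attempts += 1
        if check(guess):
            return guess, attempts
    return None, attempts


# Hypothetical target: a lock whose PIN happens to be 0420.
secret = "0420"
pin, tries = brute_force_pin(lambda guess: guess == secret)
print(pin, tries)  # 0420 421
```

The attacker never needs to know anything about the PIN; exhausting the space is enough.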

The best way to think of the slop and spam that generative AI enables is as a brute force attack on the algorithms that control the internet and which govern how a large segment of the public interprets the nature of reality. It is not just that people making AI slop are spamming the internet, it’s that the intended “audience” of AI slop is social media and search algorithms, not human beings.

What this means, and what I have already seen on my own timelines, is that human-created content is getting almost entirely drowned out by AI-generated content because of the sheer amount of it. On top of the quantity of AI slop, AI-generated content can be easily tailored to whatever is performing on a platform at any given moment, and the result is a near-total collapse of the information ecosystem and thus of “reality” online. I almost never see anything real on my Instagram Reels anymore, and, as I have often reported, many users seem to have completely lost the ability to tell what is real and what is fake, or simply do not care.


There is a dual problem with this: It not only floods the internet with shit, crowding out human-created content that real people spend time making, but the very nature of AI slop means it evolves faster than human-created content can, so any time an algorithm is tweaked, the AI spammers can find the weakness in that algorithm and exploit it.

Human creators making traditional YouTube videos, Instagram Reels, or TikToks are often making videos that are designed to appeal to a given platform’s algorithm, but humans are not nearly as good at this as AI. In Mr. Beast’s leaked handbook for employees, he reveals an obsession with the metrics that the YouTube algorithm values: “I spent basically 5 years of my life locked in a room studying virality on YouTube,” he writes. “The three metrics you guys need to care about is Click Thru Rate (CTR), Average View Duration (AVD), and Average View Percentage (AVP).”

Mr. Beast has to care very deeply about these things and needs to have an intuitive understanding of how they work because his videos are very expensive and time-consuming to make, and a video that fails to perform is a huge waste of money and effort. Adjusting to what is working on a platform at any given moment is more art than science, and it’s a slow process, because human beings have a limited ability to feed the social media content machine. It takes us hours or days to write a single article; a human running an AI can generate dozens of images, photos, or articles in a matter of seconds. This means a creator using AI doesn’t necessarily have to worry about the quality of their videos, because these metrics (or any metric on any social media platform) can be brute forced. If a video fails, it does not matter, because you can make 10 more of them in a matter of seconds.

This means that people running AI-generated accounts can have hundreds or thousands of entries into the algorithmic lottery every day, and can hammer the algorithm once they find something that works. Brute force.
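The “lottery” framing can be put in rough numbers. As a toy model (an assumption, not a description of any platform’s ranking system), suppose every post independently has the same small chance $p$ of going viral. The chance that at least one of $n$ posts hits is then:

$$P(\text{at least one viral post}) = 1 - (1 - p)^{n}$$

With a hypothetical $p = 0.001$, a creator who posts $n = 10$ videos has about a 1% chance of a hit ($1 - 0.999^{10} \approx 0.010$), while an account posting $n = 1000$ AI-generated clips has about a 63% chance ($1 - 0.999^{1000} \approx 0.632$). In this model, raising the odds does not require a better post, only more of them.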

“If you can figure out how to post content at scale, that means you can figure out how to exploit weaknesses at scale,” a former Meta employee who worked on content policy told me when I asked them about the AI spamming strategy for an article in August.

The McDonald’s Theory of YouTube Success

“Brute force” is not just what I have noticed while reporting on the spammers who flood Facebook, Instagram, TikTok, YouTube, and Google with AI-generated spam. It is the stated strategy of the people getting rich off of AI slop.

Every single day, I get marketing emails from a 17-year-old YouTube hustler named Daniel Bitton. His message, uniformly, is that it makes no financial sense to spend time making quality YouTube videos, and that making a large quantity of AI-generated Shorts is far more lucrative: “While others spend 5-6 hours making ONE ‘perfect’ video…We’re cranking out 8-10 shorts in under 30 minutes. How? By combining two simple ingredients: 1) Cutting-edge AI tools that do 90% of the work. 2) My simple 3-step formula that tells the AI exactly how to create viral Shorts. Total time I spend on average creating a potentially viral Short? 2-4 minutes. Max.”

Another: “YouTube doesn’t care about your production value. They care about FEEDING their audience. And their audience is hungry for SHORT content … Ready to start feeding the algorithm what it’s actually hungry for?”

Another: “The great thing about posting Shorts is AI. See, it practically does 90% of the work for you. All you need to do is give it a few pointers, press a few buttons, let it create videos for you, and let the algorithm do its thing.”

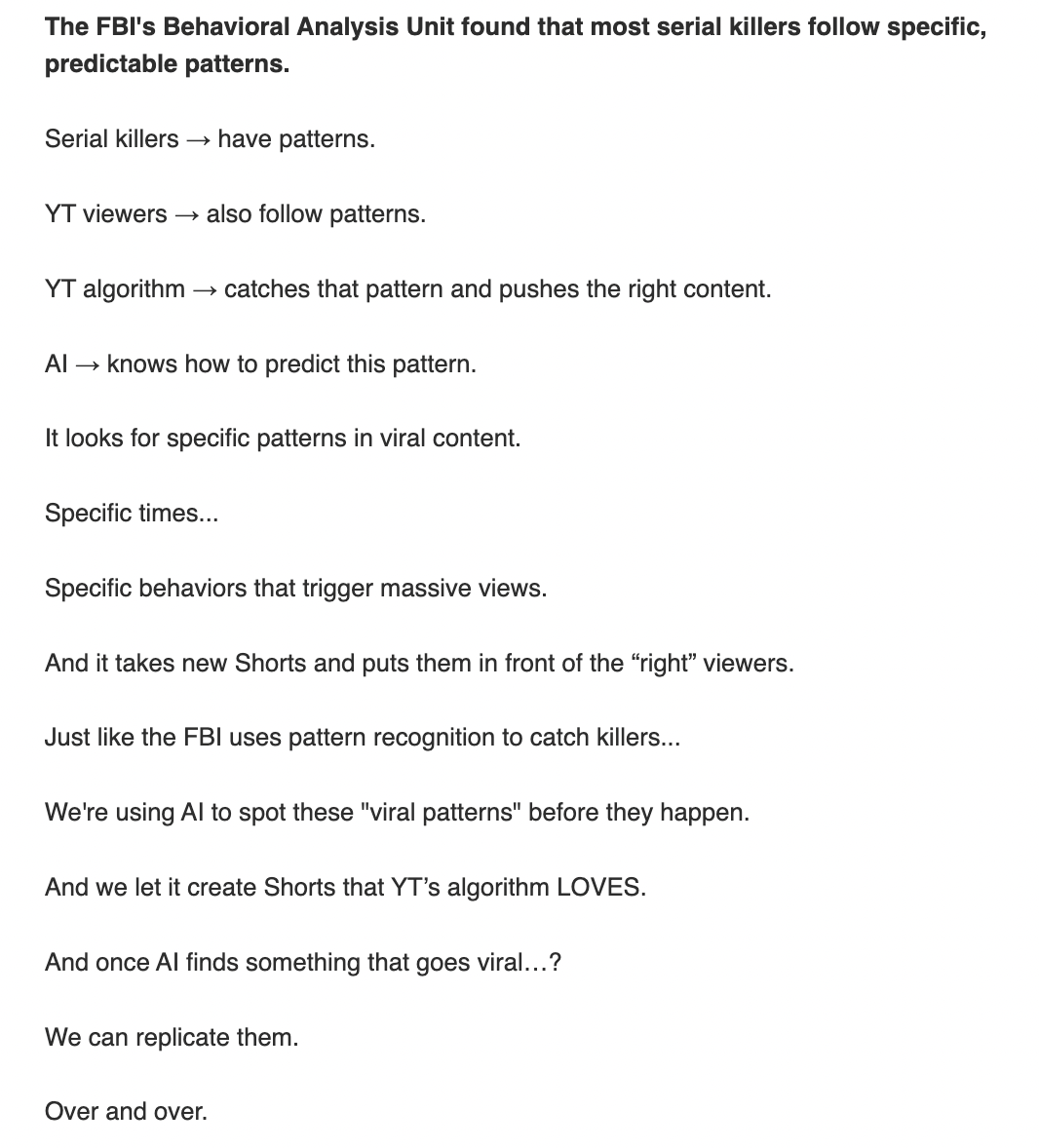

In another email, Bitton likens going viral on YouTube to the repeating patterns serial killers follow: “Serial killers → have patterns. YT viewers → also follow patterns. YT algorithm → catches that pattern and pushes the right content. AI → knows how to predict this pattern. We’re using AI to spot these ‘viral patterns’ before they happen. And we let it create Shorts that YT’s algorithm LOVES. And once AI finds something that goes viral…? We can replicate them. Over and over. Like clockwork.”

In another email, Bitton says YouTube’s Shorts algorithm is “broken,” and that you can “exploit” it while it’s broken “using simple AI-generated clips.”


Bitton’s colleague, Musa Mustafa (who I wrote about a year ago), advertises his own “Media Metas” strategy and community for spamming TikTok, YouTube, and Instagram. In recent months, the entirety of his marketing has focused on using AI: “The 2025 creator is just chilling, using AI to generate a week’s worth of content in 30 mins,” Mustafa wrote in a recent marketing email. “Since AI is literally trained on the BEST content…It gets you BETTER results. If you’re looking to make money online by creating content, Use AI. Simple as that.”

Both Mustafa and Bitton tell users that it makes no sense trying to become the next Mr. Beast, who they see as a singular figure. “All these ‘premium’ channels with perfect production? They’re slowly dying. Why? Because they need a $5,000 camera, studio lighting, professional editing, days to produce… And for what?” Bitton writes. “To compete with Mr Beast and barely get 1000 views? Heck even if they get 100k views, it would still not be worth it. Because it doesn’t even compare with what creators who pump out consistent Shorts make.”

I spoke briefly to Mustafa, and asked him if he was using AI tools for “brute-forcing social media algorithms.” He said “sounds about right bro. I think that line of thought is correct. I agree with it.”

Mustafa recommends the “‘sad hot dog’ method to going viral,” likening both social media algorithms and users to hungry people going to 7-Eleven at 2 a.m. looking for anything to eat: “when you’re hungry at 2am, even a sad-looking hot dog tastes BETTER than any Michelin meal that only gives you 2 bites. Well, TikTok works pretty much the same way. Your audience isn’t expecting (or even wanting) perfectly polished videos.”

“When’s the last time you saw a viral TikTok and thought: ‘Wow, the color grading on this is incredible!’ Never. Because nobody cares. Which is actually GOOD news for you because it means you can make thousands focusing on quantity, NOT quality.”

Bitton, meanwhile, posits the “McDonald’s Theory of YouTube Success” and the “Gas Station Sushi” approach to content, both of which suggest that AI slop is good enough, and that human beings fussing over quality videos are wasting their time and are destined to fail.

“I think the brute force metaphor works well because it really is a game of numbers. If you have a Gen AI, you can make content at scale where you change the script slightly and then just play this cat-and-mouse game with people who are detecting the fraud,” Alex Hanna, Director of Research at the Distributed AI Research Institute, told me. “You’re really able to exploit any blind spots in the algorithm, then kind of spam that type of content.”

The Platforms Are Brute Forcing Themselves

My brute force attack metaphor isn’t perfect, because with a brute force attack, the ones being attacked try to stop what’s happening. In this case, the platforms are both paying spammers to brute force their platforms and increasingly have realized that they themselves can brute force their users with AI-generated ads that they can help companies make and optimize.

In that sense, there isn’t even a cat-and-mouse game occurring. Platforms and new types of startup companies aren’t trying to stop this spam. They benefit from it, enable it, and worst of all, are finding ways to supercharge it. Brute forcing the algorithms with AI is not just a trick that entrepreneurial teenagers have discovered. The social media giants who themselves make the algorithms that are under attack are not only paying AI spammers to slopify their platforms, they are building tools that will help them spam more profitably. This means that, unlike most security vulnerabilities that are urgently fixed, there is no indication that any help is coming.

A/B testing is a strategy where two (or more) types of content run concurrently, and the one that performs better in an algorithm is then pushed. Some news outlets do this with headlines and images, where they will use a tool that lets them post something on social media with multiple headline and image combinations, and then the one that performs better becomes the main one that is being pushed. Advertisers do this too, where they might try different versions of an ad, see which one gets more clicks or creates more sales with a certain audience, and then they spend more money pushing the ones that perform best.


For years, advertisers were able to make several different versions of a given ad with slightly different calls to action, different images, different captions and different targeting. But Meta recently released a tool called “Advantage+” where, instead of making a few different versions of an ad, an advertiser can use generative AI to make orders of magnitude more versions of ads that are even more microtargeted to different users. A/B testing has become A/B/C/D/E/F/G (and so on) testing, and advertisers can then spend money only on ads that have been perfectly calibrated to perform well.
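A rough sketch of the pick-the-winner logic behind A/B (or A/B/C/D…) testing follows, in Python. The variant names and click counts are made up, and the greedy rule here, which simply moves all remaining spend to the variant with the highest observed click-through rate, is an illustrative assumption. It is not how Meta’s Advantage+ or any other ad product actually allocates money; those systems are not publicly documented at this level.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    """One version of an ad or headline and how it performed in the test."""
    name: str
    impressions: int  # times the variant was shown
    clicks: int       # times someone clicked it

    @property
    def ctr(self) -> float:
        # Click-through rate; 0.0 if the variant was never shown.
        return self.clicks / self.impressions if self.impressions else 0.0


def pick_winner(variants: list[Variant]) -> Variant:
    """Return the variant with the highest observed click-through rate."""
    if not variants:
        raise ValueError("need at least one variant")
    # max() keeps the first variant it sees in case of a tie.
    return max(variants, key=lambda v: v.ctr)


def allocate_budget(variants: list[Variant], budget: float) -> dict[str, float]:
    """Greedy rule: put all remaining spend behind the best performer."""
    winner = pick_winner(variants)
    return {v.name: (budget if v is winner else 0.0) for v in variants}


# Hypothetical test results for three ad variants.
variants = [
    Variant("A", impressions=1000, clicks=20),
    Variant("B", impressions=1000, clicks=35),
    Variant("C", impressions=1000, clicks=28),
]
print(allocate_budget(variants, 500.0))  # {'A': 0.0, 'B': 500.0, 'C': 0.0}
```

Testing 50 variants instead of two changes nothing in this logic except the length of the list, which is why cheap generation makes the approach so easy to scale. A careful tester would also check that the winner’s lead is statistically meaningful before shifting the budget; this sketch skips that step.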

While Meta’s own user-facing generative AI tools have been relentlessly mocked, it credits its advertiser-facing AI targeting and generative AI tools as being behind much of its revenue growth over the last few quarters.

“Advantage+ creative is another area where we’re seeing momentum. More than 4 million advertisers are now using at least one of our generative AI ad creative tools, up from one million six months ago,” Meta CFO Susan Li said in a January earnings call. “There has been significant early adoption of our first video generation tool that we rolled out in October, Image Animation, with hundreds of thousands of advertisers already using it monthly. And so, in the Core Ads business, the Gen AI tools that we have built here that will help us enable businesses to make ads significantly more customized at scale, which is going to accrue to ad performance, that’s a place where, again, we’re already seeing promising results in both performance gains and adoption.”

In January, The Information reported that Meta is working directly with ad agencies to create additional generative AI tools. And Meta’s Advantage+ allows advertisers to “bulk create up to 50 ads at one time,” and to use “Generative AI enhancements” that tweak images, backgrounds, image aspect ratios, image animations, text variations, and calls to action: “When you apply Advantage+ creative in Meta Ads Manager and Meta Business Suite, your images and videos are optimized to versions your audience is more likely to interact with,” Meta tells advertisers.

I have also been served ads from startups that say they will help companies generate hundreds of variations of ads from Google Drive dumps of a brand’s assets and put money behind the ones that perform best. A company called Blaze AI tells companies that it helps them “steal their competitors’ content” with an “AI tool that helps you replicate your competitors’ top-performing posts and tailoring it directly to your brand” as an Instagram Reel, TikTok, blog post, LinkedIn post, etc. “The AI does the work for you,” they say. “The AI learns your brand voice so that every piece of media feels authentic … it’s infinite.” A company called Go Mega AI advertises that it can help you generate “hundreds of articles a month without doing anything” by analyzing Reddit posts, YouTube videos, and a brand’s own website. “24 hours later, I had a month of content already scheduled,” the ad says.

Content for Algorithms, Not Humans

Even though many of the AI images and reels I see have millions of views, likes, and comments, it is not clear to me that people actually want this, and many of the comments I’ve seen are from people who are disgusted or annoyed. The strategy with these types of posts is to make a human linger on them long enough to say to themselves “what the fuck,” or to be so horrified as to comment “what the fuck,” or send it to a friend saying “what the fuck,” all of which are signals to the algorithm that it should boost this type of content but are decidedly not signals that the average person actually wants to see this type of thing. It’s brute forcing a weakness in the Instagram algorithm that treats any engagement at all as a positive signal, and the people creating this type of content know this.


Decentralized, upstart social media platforms like Bluesky and Mastodon are becoming more popular because of a backlash to algorithmified, monopolistic social media platforms. My friends, many of whom are journalists or who work in adjacent industries, are increasingly spending more time in group texts talking to real humans, or supporting independent, newsletter-centric media outlets like ours. RSS is coming back, to some degree. But the problem here is one of scale. From our perspective, 404 Media has been a huge success, our articles are widely shared, and our business is sustainable. But it is nearly impossible for me to fathom the scale of platforms like Instagram, YouTube, TikTok, and Facebook, and the hold that these platforms have on billions of people, which is how it becomes the case that an AI video of a spider demon transformation in a mall reaches orders of magnitude more people in a week than I have with all of the reporting I’ve ever done in my entire life.

Whenever I write articles about AI slop, people ask me where this is going, and what the end goal of this is for, let’s say, a company like Meta. First, I think we as a society need to realize that a huge portion of what is being done with generative AI tools is for the type of thing I have described in this article, or for nonconsensual, AI-generated intimate imagery. It is possible that generative AI will bring some of the work efficiencies and breakthroughs that venture capitalists and big tech have been hyping for years, but we must grapple with the fact that the main way people encounter generative AI is as brute force internet pollution.

For Meta, I do not think its plan is too hard to figure out, because Mark Zuckerberg has been clear about his intentions: He believes that the future of “social media” is a bunch of human beings scrolling through and arguing about AI-generated content on his many platforms. In many ways, that future is already here. But here is what I think comes next:

Meta is an ads business that makes the most money when it can keep people on its platform and engaged for as long as possible. Advertisers spend more money when their ads are more effective, and their ads are more effective when they are very narrowly targeted to a person’s interests. The best way to do this is to learn as much as possible about its users, and to then deliver both content and advertisements that precisely target any individual user.

There are billions of people on Meta’s platforms making billions of pieces of content, but even that is not enough. The goal is to move toward a world where a never-ending feed of hyper niche content can be delivered directly to the people who are into that type of content.

We are already seeing this in the AI influencer and AI porn space on Instagram, which, per usual, is far ahead of the curve of other industries. I have stumbled on accounts where old, AI-generated men worship hot, young, AI-generated women’s feet; accounts where AI-generated octopuses make out with AI-generated women and a separate account where AI-generated fish make out with AI-generated women; AI orcs get married to AI-generated waifus, etc. Any fetish or interest that any person could possibly have, AI can generate endlessly, and a social media algorithm can deliver directly to you. As Sam wrote when we launched 404 Media, AI porn is pushing to the “edge of knowledge,” and the rest of the AI content industry is following suit.

Better still, AI-generated content that is generated directly on Meta’s own platforms will have content tags, metadata, and prompting data that will more easily allow the algorithm to deliver cute AI-generated golden retrievers to golden retriever owners alongside pet food ads that have golden retrievers in them for the golden retriever owners and rat terriers in them for rat terrier owners. It will deliver AI-generated doomsday, conspiracy, and natural disaster content to people who linger on AI-generated videos of wildfires and hurricanes, interspersed with AI-generated ads for preppers. Brave teens will get AI-generated creepypasta and jumpscare content. Religious people will get AI Jesus, the devil, and the Pope. Trump fans will get Elon Musk inspiration porn interspersed with AI-generated ads for Trump coins.

The combinations and possibilities are endless, and this type of thing is already happening. Social media algorithms are being brute forced with AI content and soon our very reality will be, too.